With Aimetis' video analytics, it is possible to detect specific events and activities automatically from your cameras without human intervention. Video analytics makes it possible to filter video and notify you only when user-defined events have been detected, such as a vehicle stopping in an alarm zone or a person passing through a digital fence. Today's robust video analytics produce far fewer false alarms than the motion detection methods employed in earlier DVRs or cameras. Aimetis offers video analytics add-ons on a per-camera basis in the form of Video Engines (VE).

In order to receive an alarm in AIRA, three things must be configured:

1) How to select video analytics:

Some analytics can run concurrently on the same camera (such as VE150 Motion Tracking and VE350 Left Item Detection), but others cannot (such as VE160 People Counting with VE150 Motion Tracking). If the desired video analytic is not selectable, de-select the currently selected analytic engine first; the other analytics then become selectable.
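
The concurrency rule can be pictured as a lookup of incompatible pairs. The following is a minimal sketch, not AIRA's actual logic: the function names and the `INCOMPATIBLE_PAIRS` table are hypothetical, and the table contains only the one incompatible pair named above (the full compatibility matrix is not given here).

```python
# Hypothetical table: only the incompatible pair named in the text is listed.
INCOMPATIBLE_PAIRS = {frozenset({"VE150", "VE160"})}


def conflicts(selected, candidate):
    """Return the already-selected engines that prevent enabling `candidate`."""
    return sorted(
        engine
        for engine in selected
        if frozenset({engine, candidate}) in INCOMPATIBLE_PAIRS
    )


def can_enable(selected, candidate):
    """True if `candidate` can run alongside every engine in `selected`."""
    return not conflicts(selected, candidate)


# VE350 can be added next to VE150; VE160 cannot until VE150 is de-selected.
print(can_enable({"VE150"}, "VE350"))
print(conflicts({"VE150", "VE350"}, "VE160"))
```

In this sketch, the engines returned by `conflicts` are the ones that would have to be de-selected on the Analysis tab before the candidate becomes selectable.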

By default, the VE250 algorithm is applied to a new camera added to AIRA (assuming an Enterprise license is available). To run other analytics, perform the following steps:

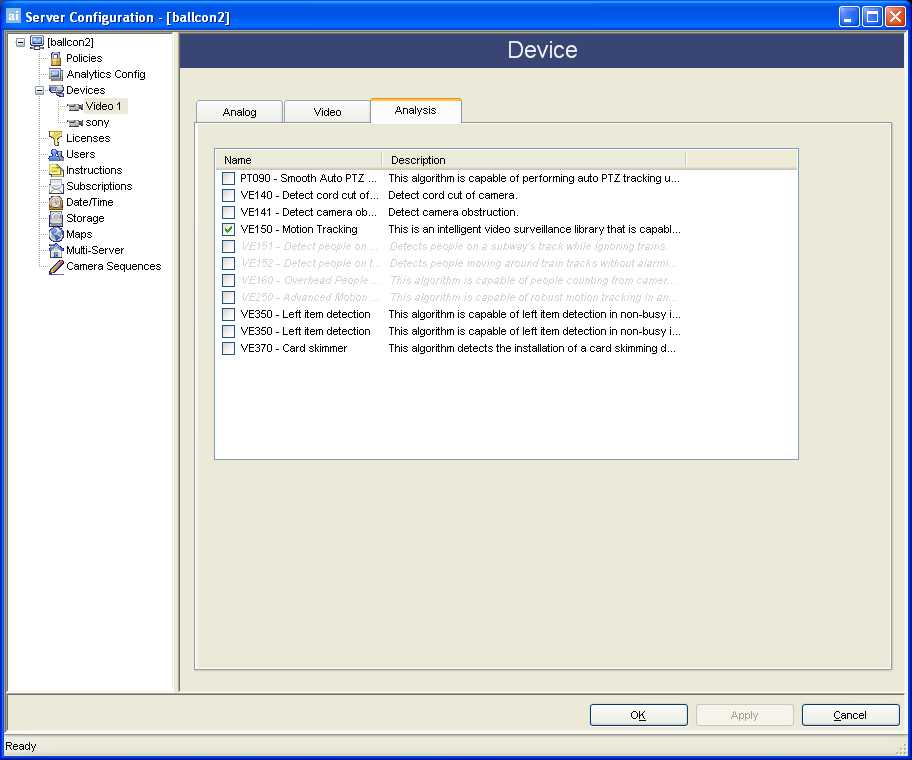

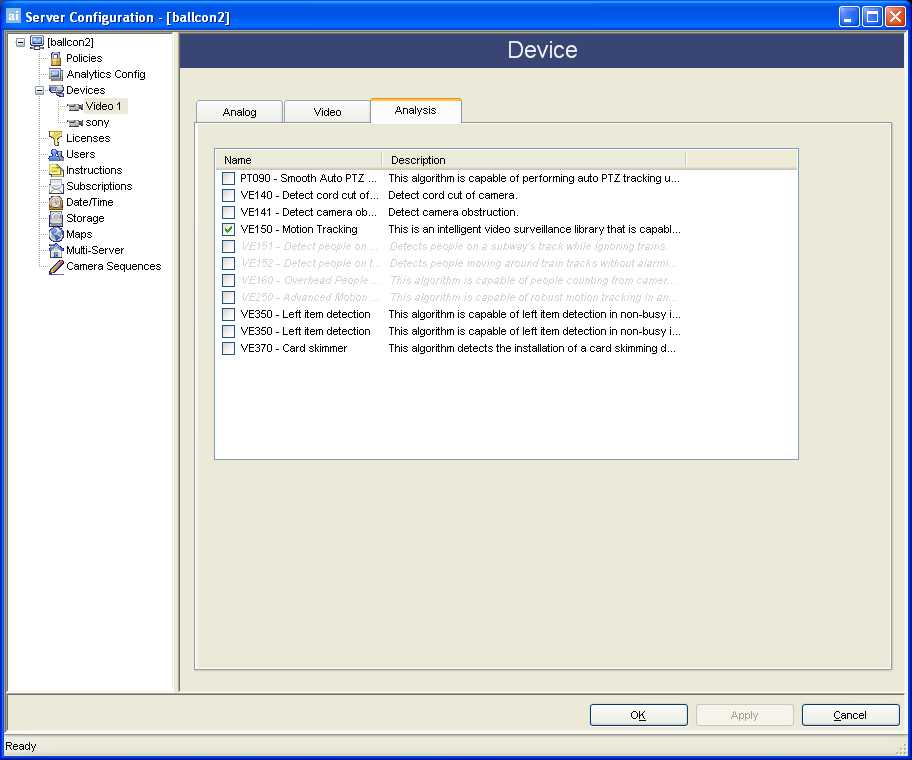

From AIRA Explorer, click Server > Configuration to load the Configuration dialog, and then select Devices from the left pane.

Select the camera you wish to configure for use with video analytics and click Edit.

Click the Analysis tab. Un-check whatever is currently checked (default is VE250) and select the desired video analytic to run on the current camera.

Finally, click Apply to save the settings and OK to close the dialog.

2) How to configure video analytics:

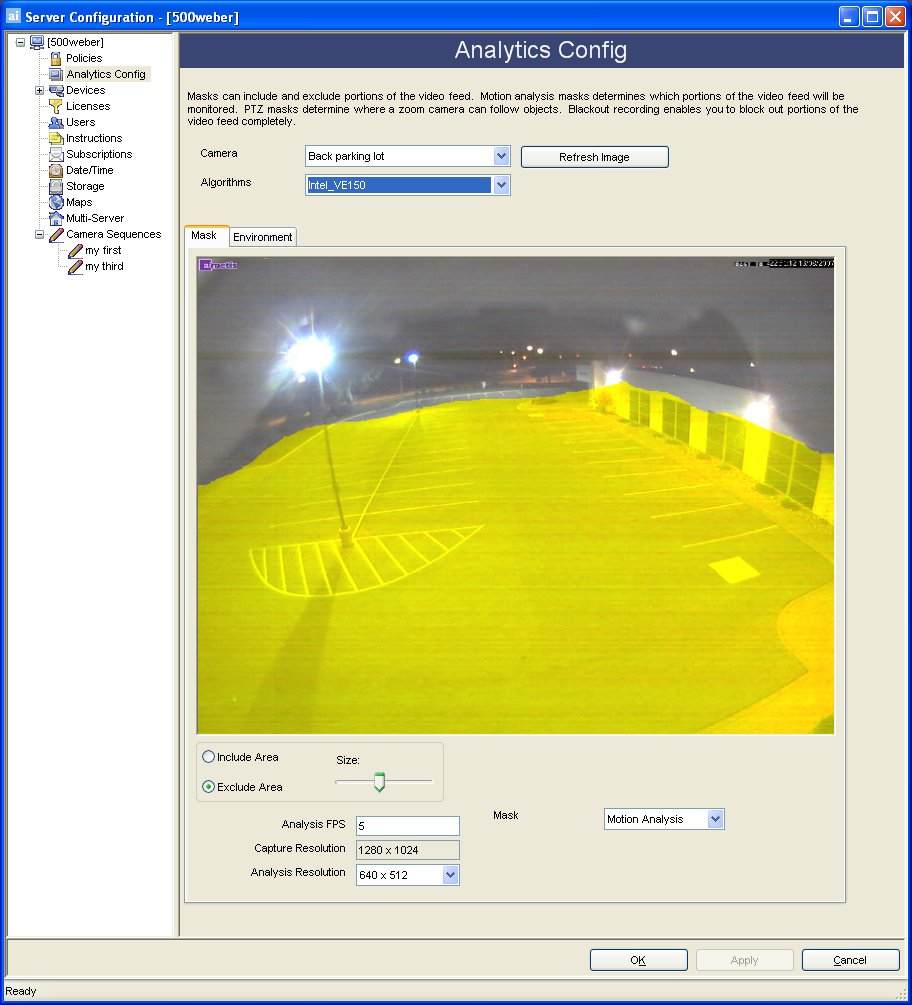

After cameras have been added and analytics have been enabled for cameras, the analytics themselves need to be configured. The Analytics Config dialog allows users to configure the analytics.

To configure analytics, click Server > Configuration and select the Analytics Configuration pane.

Next, select the Camera and the Algorithm to configure (the algorithms available on the camera are defined in the steps above).

Configure the analytic.

Each video analytic may have slightly different configuration options, but there are many commonalities. Typically, Mask, Analysis FPS / Analysis Resolution and Perspective must be set at a minimum.

Masks

Setting Masks defines where AIRA can track objects. Any time an object is tracked through the scene, AIRA will colour that portion of the Time Line yellow. By default, the entire scene is covered in the yellow mask, meaning everything in the field of view of the camera will be analyzed. AIRA has been designed to work well in dynamic outdoor environments, so rain or snow would not normally cause AIRA to track objects falsely. However, in some cases, you may wish to remove certain portions of the screen from analysis (such as a neighbor's property, or a tree which is causing false alarms). The Motion Mask dialog allows you to modify where tracking should and should not occur.

Note: The Motion Mask is different from the Alarm Mask, defined in the Policy section. The Alarm Mask defines the area in the video where alarms will occur; the Motion Mask defines the area of the image where activity is detected. The Alarm Mask cannot be greater than the Motion Mask. The Alarm Mask is bounded by the Motion Mask.
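
One way to picture "the Alarm Mask is bounded by the Motion Mask" is as a cell-by-cell intersection of two grids. The sketch below is illustrative only: the grid representation and the `effective_alarm_mask` function are assumptions, not AIRA's internal data structures.

```python
def effective_alarm_mask(motion_mask, alarm_mask):
    """Keep an alarm cell only where the motion mask also covers it.

    Both masks are equal-sized grids (lists of rows) of booleans, where
    True means the cell is included in the mask.
    """
    return [
        [motion and alarm for motion, alarm in zip(motion_row, alarm_row)]
        for motion_row, alarm_row in zip(motion_mask, alarm_mask)
    ]


# The right-hand column is excluded from tracking (for example, a tree).
motion = [[True, True, False],
          [True, True, False]]
# The requested alarm area covers the two right-hand columns.
alarm = [[False, True, True],
         [False, True, True]]

# Only the middle column can raise alarms: it is the overlap of both masks.
print(effective_alarm_mask(motion, alarm))
```

Cells outside the Motion Mask are never tracked, so in this model they cannot contribute to an alarm even if the requested alarm area covers them.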


Analysis and Resolution

Additional features of the analytic can also be configured. The Analysis FPS field sets the number of frames per second that the analytic analyzes. Normally this field should be left at the default value. It is possible to record at a higher frame rate than the analytic engine analyzes, which reduces CPU utilization (it is unnecessary to analyze 25 frames per second, for example). The Capture Resolution text box displays the original video size, while the Analysis Resolution combo box allows you to specify the image size to be analyzed. To reduce CPU utilization, it is common to record at higher frame rates and resolutions than the video engine receives for analysis.
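
To illustrate why a lower Analysis FPS reduces load, the sketch below picks which captured frames would be handed to an analytic when the analysis rate is lower than the capture rate. It is a generic frame-decimation example, not AIRA's scheduler; the function name and the frame rates used are illustrative assumptions.

```python
def frames_to_analyze(capture_fps, analysis_fps, n_frames):
    """Return indices of captured frames that would be passed to analysis.

    Frame i is kept whenever it starts a new analysis slot, so kept frames
    are spread evenly across the captured stream. Rates are whole numbers.
    """
    if capture_fps <= 0 or analysis_fps <= 0:
        raise ValueError("frame rates must be positive")
    # Analysis cannot run faster than frames arrive.
    analysis_fps = min(analysis_fps, capture_fps)
    picked = []
    last_slot = -1
    for i in range(n_frames):
        slot = i * analysis_fps // capture_fps
        if slot != last_slot:
            picked.append(i)
            last_slot = slot
    return picked


# One second of video recorded at 25 FPS but analyzed at 5 FPS:
# every fifth frame is analyzed, while all 25 frames are still recorded.
print(frames_to_analyze(25, 5, 25))
```

In this example the analytic processes one fifth of the recorded frames, which is the kind of saving the Analysis FPS setting is meant to provide.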

Perspective

For certain video analytics, Perspective information must be entered for proper functionality. Object classification, for example, requires Perspective information in order to work properly. Typically you are required to draw a line approximately 5 meters long in the top half of the image, and another in the bottom half of the image. These settings need to be as accurate as possible for accurate object classification. For more information on setting Perspective information, visit the individual video analytic pages.
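
The two reference lines give the image scale at two heights in the frame, which is why their accuracy matters. The sketch below shows one common simplification, linear interpolation of pixels per meter between the two lines. It is an assumption for illustration and is not stated to be the method AIRA uses; the function name and all pixel values are hypothetical.

```python
def pixels_per_meter(row, top_line, bottom_line):
    """Estimate the image scale (pixels per meter) at a given image row.

    Each reference line is (row, length_in_pixels, length_in_meters).
    The scale is interpolated linearly between the two reference rows.
    """
    top_row, top_px, top_m = top_line
    bottom_row, bottom_px, bottom_m = bottom_line
    if top_row == bottom_row:
        raise ValueError("reference lines must be on different rows")
    top_scale = top_px / top_m
    bottom_scale = bottom_px / bottom_m
    t = (row - top_row) / (bottom_row - top_row)
    return top_scale + t * (bottom_scale - top_scale)


# Two 5-meter reference lines: 40 pixels long near the top of the image
# (row 100) and 160 pixels long near the bottom (row 400).
top = (100, 40, 5.0)
bottom = (400, 160, 5.0)

scale = pixels_per_meter(250, top, bottom)  # halfway between the two lines
object_width_m = 36 / scale                 # a 36-pixel-wide object at row 250
print(scale, object_width_m)
```

Under this model, an error in either reference line shifts the estimated size of every object between them, which can move an object from one size class to another.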

3) How to configure analytics for alarming:

In order to receive real-time alarms for specific events, Policies must be configured. The Policy section is where the alarming capability of the analytic is configured. For more information on Policies, visit the Policies section. For the specific alarming capabilities of an analytic, visit the desired analytic section below.

See Also

VE161 45-degree people counting